Update 1.68 (and 1.67, 1.66, 1.65, 1.64, 1.63…)

We’ve not done a big update in a while, and this isn’t a big update either. Instead we’ve been doing lots of small updates, so this is a quick announcement post to get everyone up to speed with the things that have changed over the last couple of months. Let’s face it, you don’t want to read a post with 20 words on it (I mean, you barely want to read this one as it is).

The changes below make up updates 1.66, 1.67 & 1.68:

Improved: Robots Checking

These are the improvements you really wanted; you just didn’t know it. Tick the aptly named ‘Robots Access’ tickbox, and URL Profiler will now check every URL in your list for all of the following:

- Robots.txt Disallowed

- Robots.txt Noindex

- No. Robots Tags

- Robots Noindex

- Robots Nofollow

- Robots Meta

- Robots HTTP Header

- Canonical HTTP Header

- Canonical Head

You’re welcome.
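If you’re curious what a check like this involves, here is a minimal Python sketch of most of the signals in the list above, run against a page you have already fetched. It is not URL Profiler’s code: the function `check_robots_access` and the result keys are hypothetical, the robots.txt body, HTML and response headers are assumed to be passed in as plain values, and the unofficial ‘robots.txt Noindex’ directive is left out because the standard library parser doesn’t support it.

```python
import re
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsHeadParser(HTMLParser):
    """Collects robots meta tag values and canonical link hrefs from a page."""

    def __init__(self):
        super().__init__()
        self.robots_tags = []  # content of each <meta name="robots"> found
        self.canonicals = []   # href of each <link rel="canonical"> found

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}  # HTMLParser lowercases tag/attr names
        if tag == "meta" and a.get("name", "").lower() == "robots":
            self.robots_tags.append(a.get("content", "").lower())
        elif tag == "link" and a.get("rel", "").lower() == "canonical":
            self.canonicals.append(a.get("href", ""))


def check_robots_access(url, robots_txt, html, headers, user_agent="*"):
    """Hypothetical helper: robots/canonical signals for one already-fetched URL."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())  # robots.txt body supplied as text, no network
    page = RobotsHeadParser()
    page.feed(html)
    page.close()
    directives = ",".join(page.robots_tags)
    h = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    # A canonical sent as an HTTP header looks like: Link: <https://...>; rel="canonical"
    link = re.search(r'<([^>]*)>\s*;\s*rel="?canonical"?', h.get("link", ""), re.I)
    return {
        "robots_txt_disallowed": not rp.can_fetch(user_agent, url),
        "robots_tag_count": len(page.robots_tags),
        "robots_noindex": "noindex" in directives,   # meta tags only
        "robots_nofollow": "nofollow" in directives,  # meta tags only
        "robots_meta": directives,
        "robots_http_header": h.get("x-robots-tag", ""),
        "canonical_http_header": link.group(1) if link else "",
        "canonical_head": page.canonicals[0] if page.canonicals else "",
    }
```

Passing in already-fetched content keeps the checks separate from the HTTP layer, which also makes them easy to test offline.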

Fixed: My-Addr API Error

To those of you who braved the gauntlet of My-Addr’s website and managed to sign up for their API, we salute you. After all that effort, you might have been dismayed to learn that the My-Addr API stopped working completely the other day.

It turns out they had switched their API from HTTP to HTTPS. Of course, as is their wont, they didn’t feel it necessary to actually tell anyone about it.

So we found it and fixed it up.

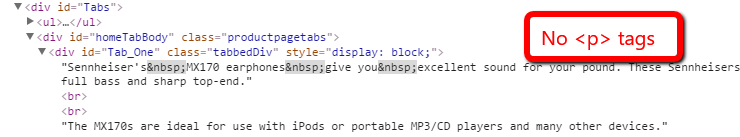

Fixed: Duplicate Content Checker – Div Issue

A shoutout to Wayne Barker for spotting this one, winning himself a Cadbury’s Double Decker in the process.

I should spare you the gory details, but I won’t. This was caused by a small bug in the Duplicate Content Checker that appeared when a content area div was specified but the div contained no text inside <p> tags. In that case the tool returned ‘Not Checked’, instead of falling back to look for text outside of <p> tags.

Instead, the tool was falling back to look for <body> and trying to pick up all the text in there (which is appropriate when you are checking a whole page without specifying a content area div). When you specify a div, however, there IS NO SPOON. I mean, there is no <body> inside it. So it couldn’t find any text.
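The corrected fallback order (paragraph text inside the content div first, then any text inside that div, and only then ‘nothing to check’) can be sketched in Python like this. It is an illustration, not the tool’s actual code: `content_text` and `ContentAreaText` are hypothetical names, and we assume the content area is identified by its `id` attribute.

```python
from html.parser import HTMLParser


class ContentAreaText(HTMLParser):
    """Collects text inside a target div: all of it, and the part inside <p> tags."""

    def __init__(self, div_id):
        super().__init__()
        self.div_id = div_id
        self.div_depth = 0  # >0 while inside the target div (counts nested divs)
        self.p_depth = 0    # >0 while inside a <p> within the target div
        self.all_text = []
        self.p_text = []

    def handle_starttag(self, tag, attrs):
        if self.div_depth:
            if tag == "div":
                self.div_depth += 1
            elif tag == "p":
                self.p_depth += 1
        elif tag == "div" and dict(attrs).get("id") == self.div_id:
            self.div_depth = 1

    def handle_endtag(self, tag):
        if not self.div_depth:
            return
        if tag == "div":
            self.div_depth -= 1
            if self.div_depth == 0:
                self.p_depth = 0  # left the content area entirely
        elif tag == "p" and self.p_depth:
            self.p_depth -= 1

    def handle_data(self, data):
        if self.div_depth and data.strip():
            self.all_text.append(data.strip())
            if self.p_depth:
                self.p_text.append(data.strip())


def content_text(html, div_id):
    parser = ContentAreaText(div_id)
    parser.feed(html)
    parser.close()
    if parser.p_text:
        return " ".join(parser.p_text)    # preferred: paragraph text in the div
    if parser.all_text:
        return " ".join(parser.all_text)  # fallback: any text in the div, never <body>
    return None                           # genuinely nothing to check
```

The key point is that the fallback stays scoped to the div you asked for, rather than reaching for a <body> that isn’t there.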

Fixed: Custom Scraper XPath Issue

Occasionally, when using the Custom Scraper with XPath selectors, the data would get mixed up in the export: some rows repeated data from other rows and didn’t correspond to the correct URLs.

This only happened with XPath, because the scraping threads sometimes ended up sharing the same HTML element.

That explains that then. All fixed now.
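The general fix for this kind of bug is to give each thread its own parsed document and to return every result paired with the URL it came from. Here is a minimal Python sketch of that pattern. It is not how URL Profiler is built: `scrape_one` and `scrape_all` are hypothetical, and because the standard library has no full HTML XPath engine, it assumes well-formed (XHTML-style) markup and uses ElementTree’s limited XPath support.

```python
import xml.etree.ElementTree as ET
from concurrent.futures import ThreadPoolExecutor


def scrape_one(url, html, xpath):
    """Parse this page into its own tree, so no element is shared between threads."""
    root = ET.fromstring(html)  # assumes well-formed markup
    return url, [el.text for el in root.findall(xpath)]


def scrape_all(pages, xpath, workers=4):
    """pages: dict of url -> html. Returns rows in input order, each tagged with its URL."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, however the threads finish
        return list(pool.map(lambda item: scrape_one(item[0], item[1], xpath), pages.items()))
```

Because each row carries its own URL, even a reordering bug elsewhere couldn’t silently attach one page’s data to another page’s row.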

Removed: PageRank Buttons

Toolbar PageRank officially went dark a couple of months ago, so the option to check it in URL Profiler became completely redundant.

We kept the buttons present in our UI as we didn’t want to ruin the balanced aesthetic of our interface…

But all good things come to an end, so they’ve gone now.

A Few Older Updates

Since I haven’t written about these yet, here are the changes from updates 1.63, 1.64 & 1.65:

Changed: Messaging for Proxy Supplier

The proxy provider we had been recommending started to let us (and our users) down, badly. So we dropped them, and found a new supplier to recommend.

If you are at all interested in using proxies with URL Profiler, I suggest you read our guide – Using Proxies with URL Profiler.

Fixed: Moz API Issues for Paid Subscribers

This affected proper paid API users, as in those paying a minimum of $500/month just for the API. The tool was getting stuck when lots of URLs were entered, because the Moz API can’t actually handle 200 requests a second without using multiple threads, so we’ve had to slow down the rate at which requests are made.
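If you call an API from several threads yourself, one common way to slow the request rate down is a single limiter that all threads share. This is a minimal Python sketch of that idea. `RateLimiter` is a hypothetical class, and the rate you pass in is up to you; nothing here reflects Moz’s documented limits or URL Profiler’s actual settings.

```python
import threading
import time


class RateLimiter:
    """Spaces calls at least 1/max_per_second apart, shared across threads."""

    def __init__(self, max_per_second):
        self.interval = 1.0 / max_per_second
        self.lock = threading.Lock()
        self.next_slot = time.monotonic()

    def wait(self):
        # Reserve the next free time slot under the lock...
        with self.lock:
            now = time.monotonic()
            slot = max(now, self.next_slot)
            self.next_slot = slot + self.interval
        # ...then sleep outside it, so other threads can reserve their slots meanwhile.
        time.sleep(max(0.0, slot - now))
```

Each worker calls `limiter.wait()` immediately before sending a request, so the combined rate across all threads stays at or below `max_per_second`.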

Fixed: Issue Where Trailing Slash Was Being Added to Original URL

This was a weird one. At some point we appear to have introduced an odd little quirk where we would add a trailing slash to the ‘Original URLs’ in a list. Since people sometimes need this original URL in order to match against (for VLOOKUPs and whatnot), we needed to stop doing this. So we did.
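The underlying principle is to leave the user’s input exactly as entered and do any normalising on a separate copy. Here is a minimal Python sketch of that idea; `normalise_for_request` and `build_rows` are hypothetical names, and the only normalisation shown (an empty path becomes `/`, which an HTTP request needs anyway) is our own illustrative choice.

```python
from urllib.parse import urlsplit, urlunsplit


def normalise_for_request(url):
    """Return a copy suitable for fetching; an empty path becomes '/'."""
    parts = urlsplit(url)
    return urlunsplit(parts._replace(path=parts.path or "/"))


def build_rows(urls):
    # Keep the exact input in 'original_url' so VLOOKUPs still match.
    return [{"original_url": u, "request_url": normalise_for_request(u)} for u in urls]
```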

Improved: Stability of .NET Platform (Windows)

As the internet is moving towards HTTPS, some websites now only accept TLS 1.1 or above, so older browsers are not able to connect.

Our software was experiencing a similar issue, until we updated to the latest .NET platform, which handles all this obscure security stuff without batting an eyelid.
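Our fix was the .NET update itself, but for comparison, here is roughly what the same concern looks like in Python’s standard `ssl` module: a client context that negotiates modern TLS and refuses older versions. The helper name is hypothetical, and the TLS 1.2 floor is our own choice (stricter than the 1.1 mentioned above).

```python
import ssl


def modern_tls_context():
    """Client context that refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and hostname checks enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

You would pass the context to a client, for example `urllib.request.urlopen(url, context=modern_tls_context())`.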

Downloads

Existing customers or existing trial users can grab the new update from here:

If you’ve not tried URL Profiler yet, you can start a free 14-day trial here. The trial is fully featured, and you don’t need to give us any payment details to get started.